If you’re managing, building, or considering buying customer data tools and infrastructure, you’ve probably heard the term “zero copy.” You may have also noticed that different software companies seem to use it to mean different things. Here’s how to understand zero copy and what to look for in the technical capabilities behind the buzzword.

Zero copy defined

“Zero copy” is a product marketing term that does a lot of heavy lifting trying to describe the capabilities data engineering teams are looking for. In fact, it’s applied to solutions for a few different related but distinct technical problems.

So what does it mean for you? In the context of customer data, “zero copy” is applied to three benefits, all of them stemming from current technology infrastructure needs:

Composability: Being compatible with the rest of the customer data architecture, with a specific focus on seamless interaction with a data warehouse

Storage Security: Ease of control over who has access to any copy of your customer data, along with an overall reduction in the number of copies that need to be secured

Minimizing Complexity: Saving data engineering teams time and effort on integrations and alleviating the overall maintenance burden

To distill this into a single definition, “zero copy” is an umbrella term covering a collection of features that minimize persistent copies of data and maximize the interoperability and security of the data.

It starts to get muddled because, depending on the SaaS vendor, “zero copy” in their product may only reflect one or two of the benefits above. It’s important to ask questions about all three benefits to make sure that you’re getting the most out of your tech.

Let’s dig a bit deeper into each of these challenges and solutions.

Composability

Most companies have accumulated numerous SaaS tools over time, and together those tools hold a massive amount of data about each customer. The problem is that this data needs a centralized storage location where it can be aggregated and shared back out, so that every tool operates on the same data set. Without one, each tool relies on its own stored copy of the data, creating uncertainty about which data set is the most accurate and up to date.

This has led to the rise of cloud data warehouses like Databricks, Snowflake, AWS Redshift, Microsoft Fabric, and GCP BigQuery. These platforms offer prodigious scale, cheap storage, and configurable compute, and have built extensive partner networks of SaaS tools that integrate with them.

Zero copy in the context of composability refers to a tool's ability to either use the data warehouse as the storage for the application, or provide a direct, governable connection between the storage layer and the SaaS application.

For example, SaaS ETL and Reverse ETL tools are built to provide connectors for copying data in and out of the data warehouse. Major CDPs like Salesforce Data Cloud and the Adobe cloud provide the ability to directly query data without first copying it into their infrastructure, but ultimately still store their own copies. In both cases they call it “zero copy”.

Storage Security

Companies have a lot of data, and that data needs to be secured to protect their customers and to protect the company legally. Major privacy laws such as GDPR and CCPA, along with other local laws modeled after them, increase the burden on security teams, so the question of how to secure all of this data is increasingly important.

Zero copy in the context of storage security focuses on minimizing the number of copies and keeping the persistent copies on storage owned by the data team, rather than storage belonging to a SaaS tool. Fewer copies mean fewer places to secure, which translates to less risk. Keeping storage in locations the company manages gives its own team explicit control over access, monitoring, and security rules.

Minimizing Complexity

The more moving parts an architecture has, the bigger the burden on data engineering teams. More jobs copying and modeling data means more jobs to monitor, support, and maintain. For a sufficiently large company, the architecture can get so complex that it’s difficult even to diagram comprehensively.

Zero copy in the context of minimizing complexity means that a SaaS application can directly access data from a source location with no “job” or process to manage. For example, if you write an integration job and later need to change one of the data models involved, you will have to update the other parts of the system accordingly. If you copy data from your data warehouse and then add or remove a field, you will have to adjust the jobs and applications that rely on that data.

If you can simply use data sharing to provide an application with access, that application can keep references to that data up to date without extra work to adjust the copy job.
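To make the difference concrete, here is a minimal Python sketch that models a warehouse table as a list of dictionaries. `run_copy_job`, `COPY_JOB_COLUMNS`, and `SharedTable` are hypothetical names for illustration, not any vendor’s API: the copy job materializes a fixed set of columns, while the shared reference reads the source at query time.

```python
# Hypothetical in-memory "warehouse" table: each row is a dict.
warehouse_customers = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": "b@example.com"},
]

# A copy job has to be told which columns to copy. This list is the piece
# someone must edit whenever the source data model changes.
COPY_JOB_COLUMNS = ["customer_id", "email"]


def run_copy_job(source, columns):
    """Materialize a separate copy that holds only the configured columns."""
    return [{col: row[col] for col in columns} for row in source]


class SharedTable:
    """A read-only reference to the source rows; no data is copied."""

    def __init__(self, source):
        self._source = source

    def columns(self):
        # Evaluated at query time, so new fields show up immediately.
        return sorted({col for row in self._source for col in row})


app_copy = run_copy_job(warehouse_customers, COPY_JOB_COLUMNS)
shared = SharedTable(warehouse_customers)

# The data team adds a field to the source model.
for row in warehouse_customers:
    row["loyalty_tier"] = "gold"

print(sorted(app_copy[0]))  # ['customer_id', 'email'] (stale until the job is edited and rerun)
print(shared.columns())     # ['customer_id', 'email', 'loyalty_tier']
```

Removing a field instead would make `run_copy_job` raise a `KeyError` on its next run until `COPY_JOB_COLUMNS` is updated, which is exactly the kind of maintenance work a shared reference avoids.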

Copying also affects timelines. If you make a 1:1 copy of a dataset to integrate it, that adds processing time to a workflow, which can be limiting, especially for use cases that rely on data being as fresh as possible.
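As a back-of-the-envelope illustration, assume a copy job that snapshots the source when it starts, runs on a fixed schedule every `interval` minutes, and takes `runtime` minutes to finish. A record that lands just after a snapshot waits for the next run plus its completion, so the worst-case delay is `interval + runtime`; one that lands just before a snapshot waits only `runtime`. The function name and the 60- and 15-minute figures below are hypothetical.

```python
def copy_delay_minutes(interval: float, runtime: float) -> dict:
    """Delay before a new source record is visible in a scheduled copy.

    Assumes the job snapshots the source at the start of each run, runs
    every `interval` minutes, and takes `runtime` minutes to complete.
    The average assumes records arrive uniformly over the schedule.
    """
    if runtime > interval:
        raise ValueError("runs would overlap; this simple model does not cover that")
    return {
        "best": runtime,                    # arrives just before a snapshot
        "average": runtime + interval / 2,  # uniform arrival time
        "worst": interval + runtime,        # arrives just after a snapshot
    }


print(copy_delay_minutes(interval=60, runtime=15))
# {'best': 15, 'average': 45.0, 'worst': 75}
```

A data-sharing read has no copy job in the path, so this scheduling delay drops out entirely; only the query time itself remains.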

Amperity Lakehouse CDP and zero copy

As mentioned above, it’s important to ask any customer data tool vendor you’re evaluating how they solve for all three of the challenges referred to by “zero copy.” As an example, let’s look at how the Amperity Lakehouse CDP handles it all.

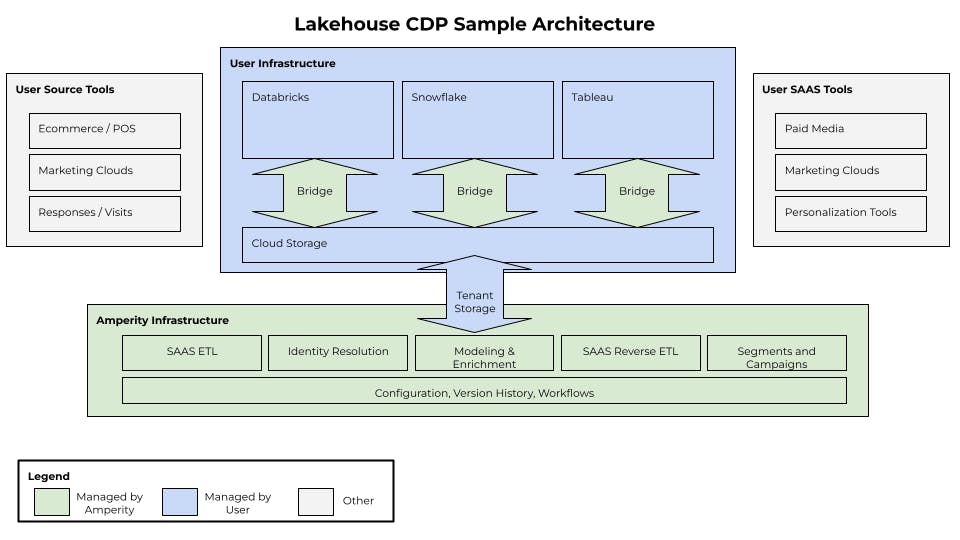

Lakehouse CDP architecture that unlocks all three benefits referred to by "zero copy."

Composability with Amperity

We developed our approach to composability based on the fact that most companies haven’t settled on a single data warehouse for their entire company — the majority of Amperity customers using Databricks also use Snowflake or another data warehouse somewhere else in their company. The other key consideration is that cloud data warehouses pretty much universally use cloud storage as the storage layer for their offerings.

With these two factors in mind, Amperity Lakehouse CDP uses open-source table formats in cloud storage for all data. The recent launch of Amperity Bridge means those same tables can be used in multiple warehouses without copying the data. For Databricks that means Delta Sharing, and for Snowflake that means Iceberg Tables; in either case, a data sharing mechanism handles governance and access, but the tables themselves always stay in cloud storage.

As a result of using open-source table formats in cloud storage, live data is available across your infrastructure.
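A plain-Python model of this arrangement, assuming one table stored once in a customer-owned bucket and two warehouses that each register a reference to its path. It does not use the Delta Sharing or Iceberg APIs; `CloudStorage`, `Warehouse`, and the `s3://example-bucket/...` path are hypothetical stand-ins. The point is that both warehouses read the same physical data, so an update is visible to each without a copy job.

```python
class CloudStorage:
    """Stand-in for a bucket in the customer's own cloud account."""

    def __init__(self):
        self.objects = {}  # storage path -> list of rows


class Warehouse:
    """Stand-in for a warehouse that registers tables by storage path."""

    def __init__(self, storage):
        self._storage = storage
        self._tables = {}  # table name -> storage path (a reference, not data)

    def register_table(self, table, path):
        self._tables[table] = path

    def query(self, table):
        # Reads whatever is in storage right now; nothing is cached locally.
        return list(self._storage.objects[self._tables[table]])


PATH = "s3://example-bucket/unified_profiles"  # hypothetical location

storage = CloudStorage()
storage.objects[PATH] = [{"profile_id": "p1"}]

warehouse_a = Warehouse(storage)
warehouse_b = Warehouse(storage)
for wh in (warehouse_a, warehouse_b):
    wh.register_table("unified_profiles", PATH)

# One write to the shared storage...
storage.objects[PATH].append({"profile_id": "p2"})

# ...is visible to both warehouses, and only one physical copy exists.
print(len(warehouse_a.query("unified_profiles")))  # 2
print(len(warehouse_b.query("unified_profiles")))  # 2
print(len(storage.objects))                        # 1
```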

Storage Security with Amperity

Amperity stores things like:

Configuration rules of the tenant and version history

Workflows and automations

Segment and query rules

All of the actual data gets stored in the storage layer, which is a cloud storage location in a customer’s own cloud account.

While we are remarkably secure (SOC 2, HIPAA, and other key certifications), if your security teams want to wall off the data, all they have to do is revoke access to the storage layer, and Amperity will no longer be able to see the data.
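That control can be modeled in a few lines, assuming the storage owner manages a simple set of access grants. `CustomerBucket`, its `grant`/`revoke`/`read` methods, and the `vendor-service-role` principal are hypothetical; in a real cloud account this would be an IAM or bucket policy change made by your security team. The sketch only shows that the owner’s revocation alone cuts off the vendor’s reads.

```python
class CustomerBucket:
    """Stand-in for customer-owned storage with owner-managed access grants."""

    def __init__(self, rows):
        self._rows = rows
        self._grants = set()

    def grant(self, principal):
        self._grants.add(principal)

    def revoke(self, principal):
        self._grants.discard(principal)

    def read(self, principal):
        # Every read is checked against the owner's current grants.
        if principal not in self._grants:
            raise PermissionError(f"{principal} is not allowed to read this bucket")
        return list(self._rows)


VENDOR = "vendor-service-role"  # hypothetical principal name

bucket = CustomerBucket([{"profile_id": "p1"}, {"profile_id": "p2"}])
bucket.grant(VENDOR)
rows_before = bucket.read(VENDOR)  # succeeds while the grant exists

bucket.revoke(VENDOR)  # the security team's single action
try:
    bucket.read(VENDOR)
    vendor_can_read = True
except PermissionError:
    vendor_can_read = False

print(len(rows_before), vendor_can_read)  # 2 False
```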

Minimizing Complexity with Amperity

Amperity provides a massive library of SaaS ETL and Reverse ETL connectors for getting the raw data from source systems and sending customer profiles and segments to destinations. Amperity ID resolution also handles turning raw data into a standardized, unified model without code.

The end result is the fewest “jobs” and “copies” of any CDP solution on the market, and infrastructure that easily plugs into your other SaaS tools to send and receive data as needed. Combined with the storage and composability approach described above, this means just one location to maintain, with no work to propagate changes to the applications consuming the data.

The 3 zero copy questions to answer

As SaaS technology has advanced, the complexity of the conversation has grown with it. “Zero copy” is a product marketing term meant to simplify that conversation, but it also acts as a bit of a smoke screen to elevate a given platform’s technical story while leaving the audience room to interpret it in their own way.

The key questions in the zero copy conversation:

How interoperable is the solution with your other infrastructure?

Are copies of data stored in locations your security team doesn’t own?

How much complexity do extra “moving parts” add?

Amperity’s Lakehouse CDP is the first offering to provide answers to all of these. Keep the data stored where you own it. Minimize the amount of copies, jobs, and code needed to make it work. Provide the best data to all of the tools you need with the most seamless experience.